Details

In Computational Science and Engineering (CSE), three trends stand out among the many dramatic changes under way: an increase in parallelism; a shift from batch-based computing done by computational scientists to integrated, interactive computing done by application experts; and software built from multiple well-established components.

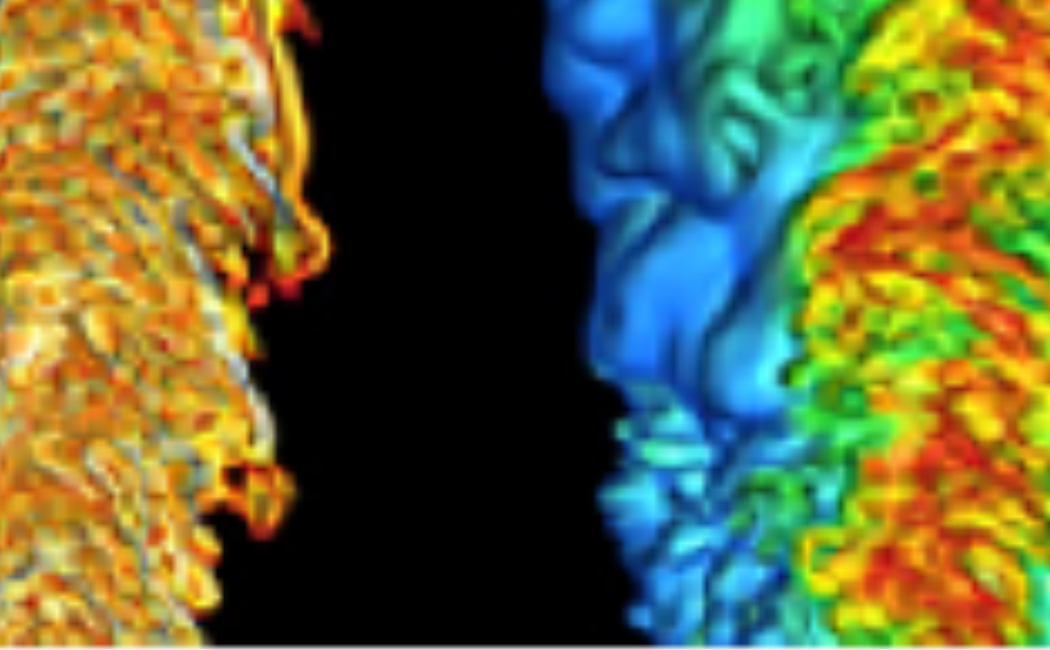

The increase in parallelism stems either from heterogeneous architectures, where accelerators such as graphics cards augment the principal computing nodes, or from machines equipped with thousands of simpler, slower microprocessors (for example, without out-of-order execution or L2 caches). An example of the latter is the BlueGene/P architecture underlying Shaheen.

The change in usage paradigm stems from an increase in application complexity. To date, scientists and engineers often prescribe computational experiments, the people writing the simulation software conduct them, and the results are finally handed back to the application experts. For software that simulates extremely complex phenomena with thousands of parameters (many of them hardly known or merely estimated), this workflow is poorly suited. Instead, scientists want instantaneous feedback on the simulation’s progress, want to control and supervise the simulation, and want to interact with its parameters without being bothered by too many technical details. Computational steering is one term that summarizes these needs.

The component composition stems from the fact that it is not economically feasible to write each simulation code from scratch, nor does it make sense in terms of software quality, performance tuning, and time-to-new-insight. As an example, the Reservoir Simulation Toolbox (RST) developed at KAUST is designed to simulate complex reservoir systems at the porous media continuum scale (Darcy scale). However, since complex Darcy-scale processes usually stem from pore-scale phenomena, it is important to couple models at different scales to ensure a correct representation of reality. The coupling of Darcy-scale modeling (e.g. RST) with Navier-Stokes solvers (e.g. Peano) is one example.
Multiscale coupling of a Navier-Stokes solver with a Darcy simulator will allow novel investigation of many interesting subsurface quantities and phenomena, such as absolute permeabilities, relative permeabilities, and capillary pressure, through large-scale computations instead of lab experiments. At the same time, the demand for improved resolution poses hard challenges for the scalability and performance of simulation codes such as Peano. Finally, such long-running simulations have to reveal their results on the fly to the application expert, using sophisticated, scalable visualization techniques and up-to-date visualization devices.

In summary, the three trends induce requirements for both the scientific computing core labs and the users of these facilities. High performance computing (HPC) researchers have to provide codes that suit massively parallel computers and are easy to use, and the people using visualization and supercomputing equipment have to work closely with experts from both laboratories so that application experts can later run their codes directly, without a programmer acting as an intermediary. The KAUST laboratories provide the necessary equipment and infrastructure in a unique way: outstanding equipment installed side by side, with experts on hand. Now it is up to the computational scientists to use this infrastructure.